While working on a CI/CD pipeline recently, we encountered flaky unit test failures. Random unit tests for a Rails app would fail intermittently with strange errors, including null objects, timeouts, and odd logic errors. The problem turned out to be that the EC2 instances running the tests did not have enough compute to handle the load. The key metric that helped us identify this was CPU steal time, not CPU utilization. Never heard of CPU steal time before? No problem. This post explains what it is and how burstable AWS EC2 instances work. It focuses on Linux; similar metrics can be obtained for Windows (known as CPU ready), as discussed here.

What is CPU Steal Time?

Virtual machines running on a host machine are all managed by a hypervisor. The hypervisor makes it possible to share the underlying resources (e.g. CPU, memory) among the different virtual machines. It can also impose limits on individual virtual machines, such as restricting how much CPU compute they can use.

CPU steal time measures how long a virtual CPU in a virtual machine spends involuntarily waiting because the virtual machine has gone over its allocated resources. This can happen either because of hypervisor-imposed resource limits, or because the host machine has no spare CPU cycles (e.g. too many virtual machines on the same host). Conveniently, the hypervisor makes steal time available to guest machines, so you can view it directly from the guest OS, provided the OS is virtualization-aware.

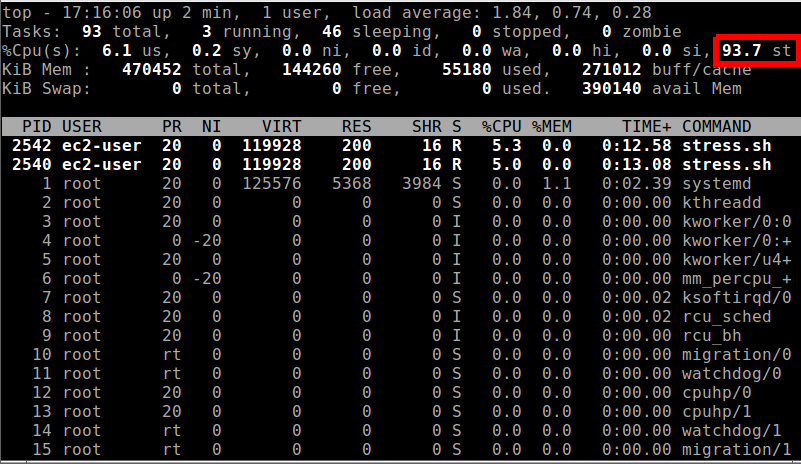

You can view the CPU steal time on Linux using the top command. In the screenshot below, the CPU steal time is currently 93.7:
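If you want the same number programmatically, for example to log it from a CI worker, one minimal approach is to sample the aggregate "cpu" line of /proc/stat twice and compare the growth of the steal counter against the growth of the total. This sketch assumes a Linux kernel recent enough to report steal as the eighth counter; the function names are hypothetical helpers, not part of any library.

```python
import time


def read_cpu_times(path: str = "/proc/stat") -> list[int]:
    """Return the aggregate CPU counters (in clock ticks) from /proc/stat."""
    with open(path) as f:
        for line in f:
            # The aggregate line is "cpu " (with a space); per-core lines are "cpu0", "cpu1", ...
            if line.startswith("cpu "):
                return [int(field) for field in line.split()[1:]]
    raise RuntimeError(f"no aggregate cpu line in {path}")


def steal_percent(before: list[int], after: list[int]) -> float:
    """Percentage of CPU time stolen by the hypervisor between two samples.

    Counter order: user nice system idle iowait irq softirq steal guest guest_nice.
    guest and guest_nice are already included in user and nice, so only the
    first eight counters are summed to avoid double counting.
    """
    deltas = [a - b for a, b in zip(after, before)]
    if len(deltas) < 8:
        raise ValueError("this kernel does not report steal time")
    total = sum(deltas[:8])
    if total == 0:
        return 0.0
    return 100.0 * deltas[7] / total


def sample_steal(interval: float = 1.0) -> float:
    """Measure steal time over `interval` seconds on the current machine."""
    first = read_cpu_times()
    time.sleep(interval)
    return steal_percent(first, read_cpu_times())
```

On a throttled instance, sample_steal() should report roughly the same figure as the "st" value in top, since both are derived from these counters over a short interval.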

AWS EC2 Instances

Regular EC2 instances provide a fixed number of vCPUs, and the number you get per instance depends on the instance type. A vCPU is:

"a thread of either an Intel Xeon core or an AMD EPYC core, except for A1 instances, T2 instances, and m3.medium."

(source: AWS EC2)

Thus, you should expect to be able to use the full compute power of these vCPUs, and the CPU steal time should be at or near zero.

AWS also offers a set of burstable performance instances, which include the T2, T3, and T3a instance types. These instance types meter compute using CPU credits. The main idea is that while these instances are lightly loaded (for example, sitting idle), they accrue CPU credits, and when there's real work to do, they spend the credits they have accrued. This suits many types of applications, which is why burstable instances are so popular.

However, what happens when burstable performance instances run out of CPU credits? The AWS docs explain that their “performance is gradually lowered to the baseline performance level”. The baseline performance level is defined as a percentage of a vCPU. For example, a t3.micro’s baseline performance level per vCPU is 10%, so if a t3.micro runs out of CPU credits, it will run at only 10% of a full vCPU’s performance.¹
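To make the credit mechanics concrete, here is a minimal simulation, assuming AWS's definition that one CPU credit equals one vCPU running at 100% for one minute, and assuming credits are earned continuously at the baseline rate. It models standard (not unlimited) mode and, as a simplification, drops to the baseline immediately instead of gradually, and ignores the cap on accrued credits. BurstableInstance and run_minute are hypothetical names for illustration only.

```python
from dataclasses import dataclass


@dataclass
class BurstableInstance:
    """Simplified burstable instance in standard (non-unlimited) credit mode.

    Assumption: 1 CPU credit = 1 vCPU at 100% for 1 minute. Gradual throttling
    and the maximum credit balance are deliberately not modeled.
    """
    vcpus: int
    baseline: float       # baseline fraction per vCPU, e.g. 0.10 for a t3.micro
    balance: float = 0.0  # currently accrued CPU credits

    def run_minute(self, demand: float) -> float:
        """Run one minute at the requested per-vCPU utilization (0.0 to 1.0).

        Returns the per-vCPU utilization actually delivered.
        """
        earned = self.baseline * self.vcpus  # credits earned this minute
        wanted = demand * self.vcpus         # credits the workload would consume
        available = self.balance + earned
        spent = min(wanted, available)       # cannot spend more than we have
        self.balance = available - spent
        return spent / self.vcpus
```

With a t3.micro modeled as BurstableInstance(vcpus=2, baseline=0.10) and no credits, a fully loaded minute delivers only 0.10 of each vCPU; after an hour of idling it has banked about 12 credits, enough for several minutes at full load before falling back to the baseline.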

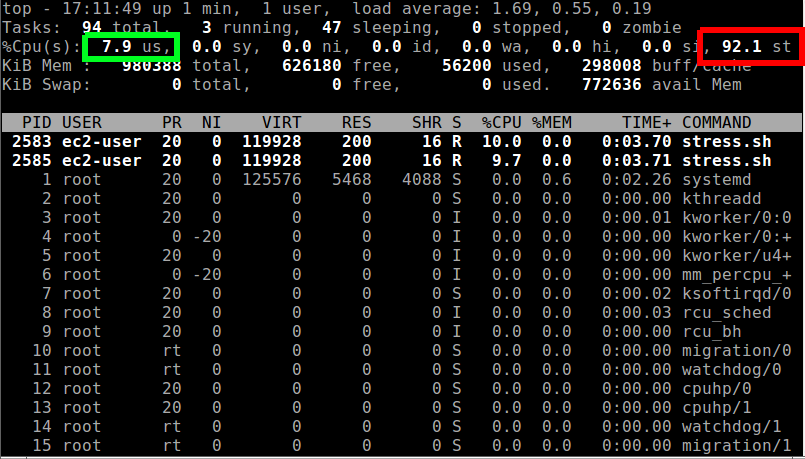

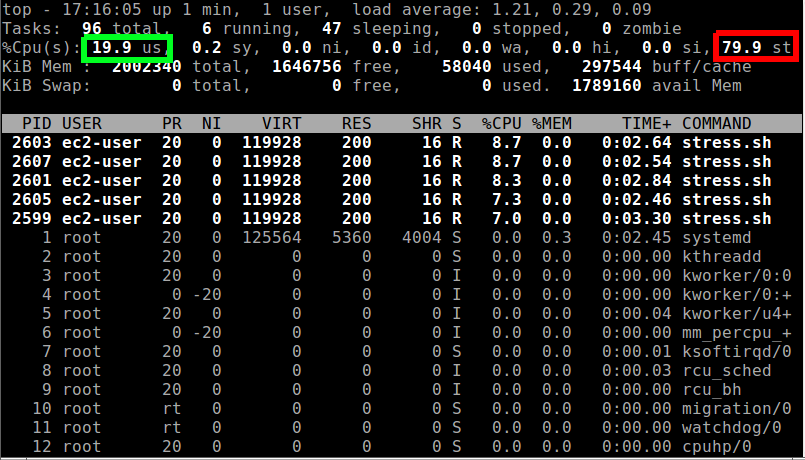

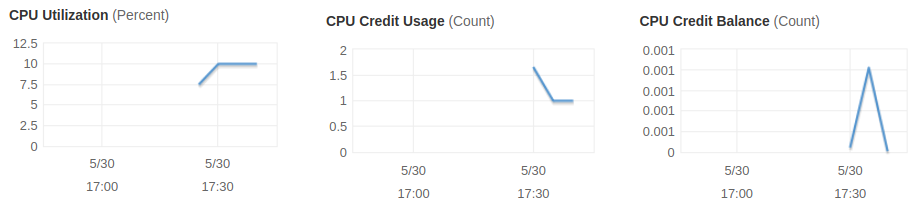

In the screenshots below, we’ve launched a t3.micro (10% baseline performance) and a t3.small (20% baseline performance) and run a stress test script to fully load the vCPUs. You can see that the CPU steal time (in red) is approximately 90% for the t3.micro and 80% for the t3.small, which matches up to the baseline performance numbers. Also notice that the CPU time spent running user processes are around 10% and 20% (circled in green) for the t3.micro and t3.small instances, respectively. Additionally, we’ve provided the CloudWatch metrics for the t3.micro, showing only 10% CPU utilization, but a zero CPU credit balance.
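The arithmetic behind the t3.micro and t3.small runs described above can be written down as a quick sanity check: a fully loaded vCPU with no credits left should run for roughly its baseline fraction and report the remainder as steal. This is a sketch under that idealized assumption; real readings wander a little, and the 0.05 tolerance and the function names are arbitrary, hypothetical choices.

```python
def expected_saturated_split(baseline: float) -> tuple[float, float]:
    """Return (running, steal) fractions for a fully loaded vCPU with no credits.

    Assumes the hypervisor lets the vCPU run exactly at its baseline and
    reports all remaining time as steal.
    """
    if not 0.0 < baseline <= 1.0:
        raise ValueError("baseline must be in (0, 1]")
    return baseline, 1.0 - baseline


def matches_throttling(observed_steal: float, baseline: float, tolerance: float = 0.05) -> bool:
    """True if an observed steal fraction is close to what credit exhaustion predicts."""
    _, expected_steal = expected_saturated_split(baseline)
    return abs(observed_steal - expected_steal) <= tolerance
```

For baselines of 0.10 and 0.20 this predicts about 90% and 80% steal, in line with the t3.micro and t3.small readings.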

Conclusion

After switching the CI/CD workers from burstable performance instances to regular fixed-performance instances, we no longer saw the flaky unit test failures. We then optimized the workers to use less CPU overall, which eventually let us switch back to burstable instances, since the workers now had enough headroom to accrue CPU credits.

Therefore, when using burstable instances, be sure to check both the CPU steal time and your CPU credit balance; CPU utilization alone can look deceptively low.
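For the credit side, CloudWatch publishes a CPUCreditBalance metric for burstable instances in the AWS/EC2 namespace. A minimal sketch, assuming boto3 is installed and AWS credentials are configured, is to fetch the minimum balance over a recent window. min_credit_balance is a hypothetical helper; the CloudWatch client (for example boto3.client("cloudwatch")) is passed in so it can be replaced with a stub.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional


def min_credit_balance(cloudwatch, instance_id: str, hours: int = 24) -> Optional[float]:
    """Return the lowest CPUCreditBalance reported over the last `hours` hours.

    `cloudwatch` is expected to be a boto3 CloudWatch client.
    Returns None if CloudWatch has no datapoints for the window.
    """
    end = datetime.now(timezone.utc)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/EC2",
        MetricName="CPUCreditBalance",
        Dimensions=[{"Name": "InstanceId", "Value": instance_id}],
        StartTime=end - timedelta(hours=hours),
        EndTime=end,
        Period=300,  # 5-minute buckets: 288 datapoints for 24 hours
        Statistics=["Minimum"],
    )
    points = response.get("Datapoints", [])
    if not points:
        return None
    return min(point["Minimum"] for point in points)
```

A minimum of zero means the instance ran out of credits at some point in the window, which is exactly when steal time climbs.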

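¹ T3 instances run in “unlimited” credit mode by default, which lets them keep bursting above the baseline after their credits run out (possibly incurring additional charges) instead of being throttled. We disabled that option when generating the screenshots for this article.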
